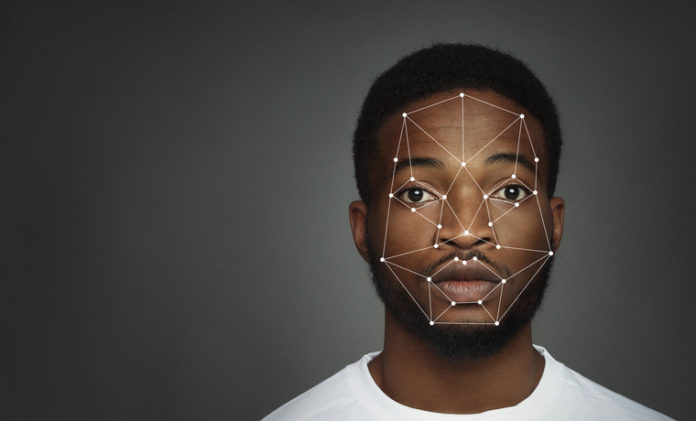

Facial recognition is new to HCM technology, but the Covid-19 pandemic has led more companies to adopt it as they screen and manage their employees’ return to work. In particular, they see it as a component of passive monitoring efforts, where tools automatically scan workplaces and people to monitor temperature, occupancy and access.

But the technology has some baggage, even aside from the obvious privacy issues. Last year, for example, the National Institute of Standards and Technology reported that facial recognition systems used by law enforcement misidentified African-American and Asian subjects 10 to 100 times more often than they did Caucasians.

Now there’s a new rub: The Wall Street Journal reports that some businesses have begun using software that detects race as they study customer behavior.

The field is “still developing,” and just how the technology will be used down the road is unclear. “Use of the software is fraught, as researchers and companies have begun to recognize its potential to drive discrimination, posing challenges to widespread adoption,” the Journal said.

Racial Recognition, Not Facial Recognition

Called “facial analysis,” as opposed to “facial recognition,” these tools use AI to scan everything from eyebrows to cheekbones, analyze their shapes and other characteristics, and determine gender, age, race and even emotions and attractiveness.

Businesses use the software in ad targeting, market research and identity confirmation, the Journal said. One vendor explained that retailers use its product to collect statistical data about store traffic. Another said dating sites use its race-classification tool, which sells for about $1,200, to make sure profiles are truthful.

While some researchers say these tools can be “startlingly accurate,” others argue they shouldn’t be used at all. Technology that includes race-detection features could pose a number of hidden risks, they say. For example, a package that reads a candidate’s facial expressions during job interviews could inadvertently discriminate if it begins factoring in race.

It’s been widely accepted that workers have limited rights to privacy when they’re using company-supplied equipment. However, the question becomes especially sensitive when workers believe they’re surrendering more privacy than they meant to simply because they’ve clocked in. The concern increases even more when you add the possibility of systems categorizing employees by race.

Image: iStock